Scottsdale-based digital marketing agency

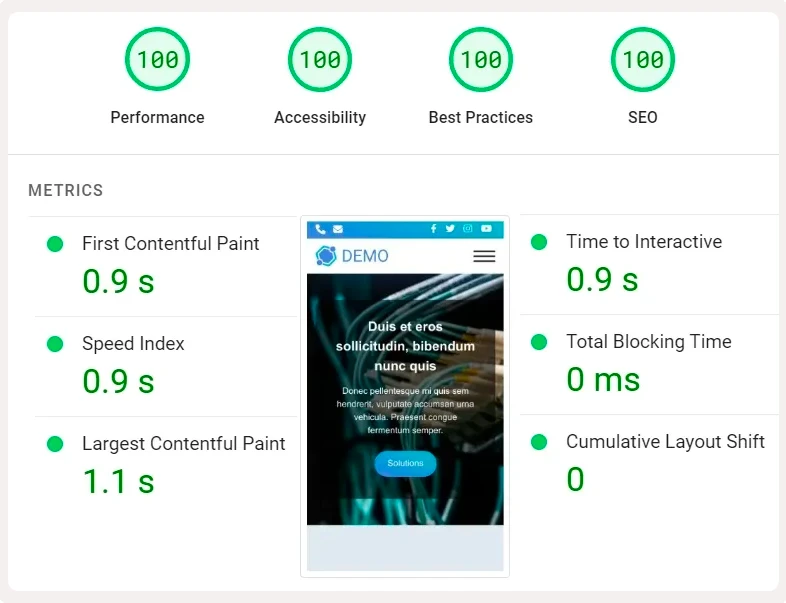

✓ Website development: Branded, fast, and secure

✓ SEO & PPC: Local or national SEO and ad campaigns

✓ Technical support: Ensuring your site is always on

✓ On-demand: Standing by whenever you need us

Boost your sales

With our digital marketing services

SEO

Optimize your site's code, content quality, and E-E-A-T signals, with built-in AI automation for local and advanced SEO.

Website development

Create customized websites and themes at scale for Shopify, Woo, WordPress, and Magento with built-in automation and AI.

Paid ads management

Maximize your online presence and boost engagement with our expert Google Ads and social media campaign management.

Custom plugins & APIs

Create any type of functionality for your company website with custom plugins, apps, and API integrations.

Project scoping

We do it right the first time, with accurate time estimates and resource allocation that meet your expectations.

Technical support & IT

Is something broken, or has your site been hacked? Our IT team is always standing by on Slack, email, or text to put out the fire.

How are we different?

100% accountability

We're not a big agency with high employee turnover. Instead, our CEO & CTO actively participate in every project.

Strict best practices

We track updates on every platform and CMS, read SEO news daily, and connect the dots to deliver dependable solutions.

Relentless innovation

Every project is handled by a specialist with 20+ years of experience who combines hands-on expertise with AI and automation to solve problems others won't even attempt.

And peace of mind

We sign an NDA and a non-compete agreement before you give our agency access to anything.

Based in Scottsdale, Arizona

At Kanaan & Co., we're not just a local business but an integral part of the Scottsdale community. Our approach to digital marketing is deeply rooted in understanding the local landscape, allowing us to tailor our services to meet our clients' needs. By building long-term relationships and leveraging our expertise to support local businesses, we aim to contribute to the growth and success of our community.

Address: 7702 E Doubletree Ranch Rd, Scottsdale, AZ 85258.

Phone number: (602) 551-6866

Digital agencies everywhere

Trust us with their clients

"Kanaan & Co. is a strategic partner of our agency, and the team is a breeze to work with. We feel like they're in-house."

Jeremy Ginsburg - Founder & CEO, NoticeUMarketing

A digital agency from Las Vegas

Check out

A few of our agency's latest projects

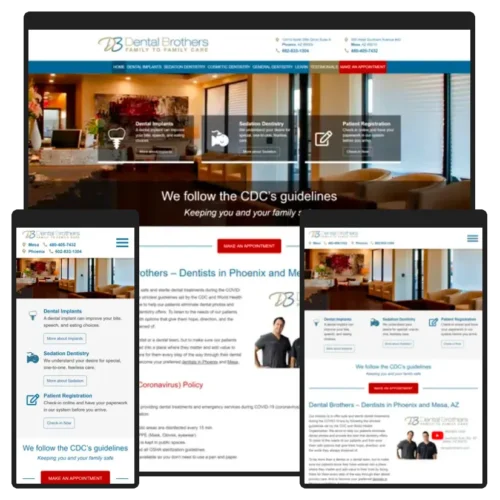

Dentist website in Arizona

High PageSpeed score | Custom theme | Responsive | Mobile-first design | Data migration

Agency client in Arizona

High PageSpeed score | Custom theme | Responsive | Mobile-first design | Data migration

Another agency client website

High PageSpeed score | Custom theme | Responsive | Mobile-first design | Data migration

Some case studies

Optimized theme

Before and after comparison

View a side-by-side comparison table of an agency client's site before and after our custom theme optimization and migration.

Healthcare case study

600% Growth

Watch how our marketing agency helped a private practice with two locations in Arizona increase its organic traffic by 600% with digital marketing superpowers.

eCommerce case study

400% Growth

See how a leading retail brand partnered with our digital agency to grow its organic traffic by 400% and its conversion rate by 30% through SEO, SEM, and high-end web development.

Get a Free Proposal